Holodeck

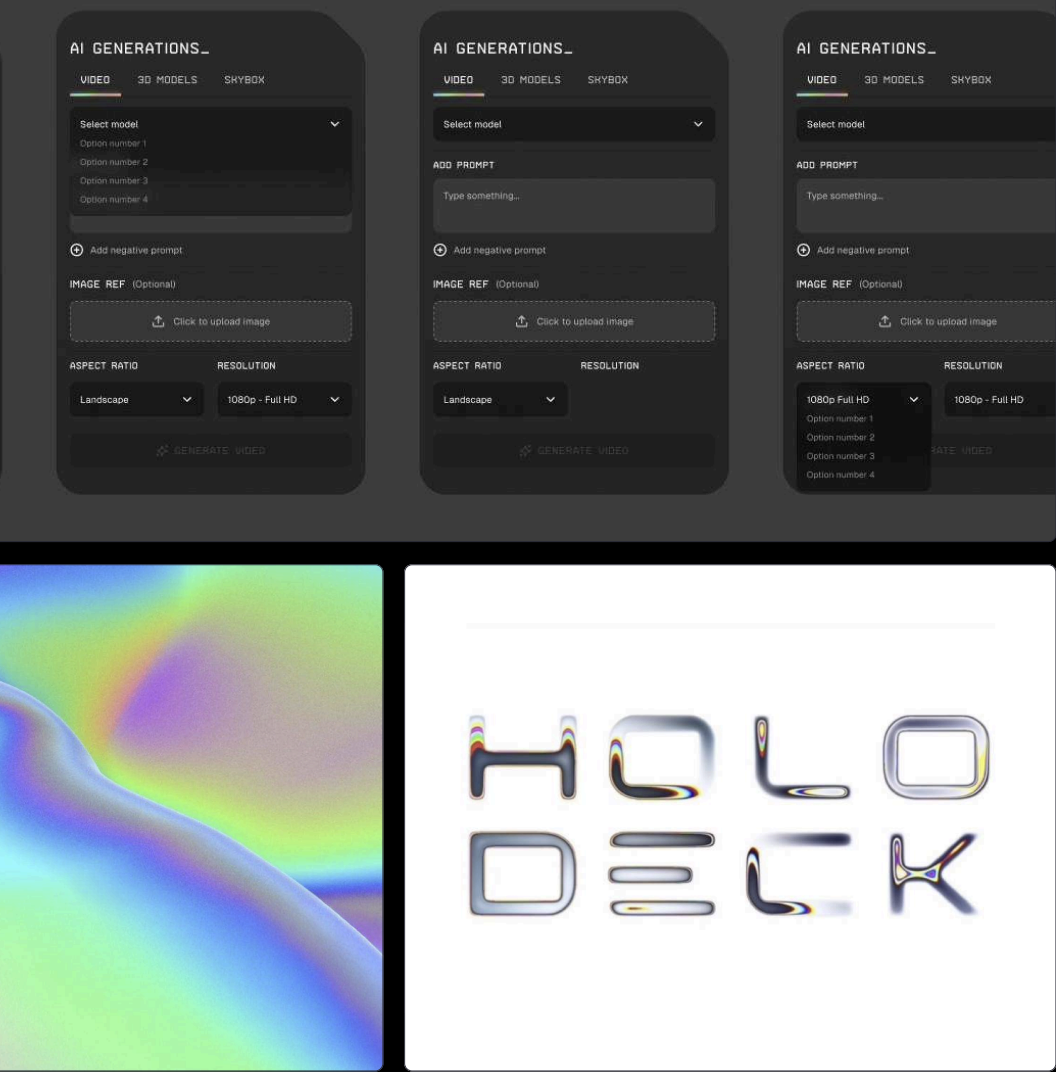

3D Canvas for AI-Generated Media. Video generation tools output flat files to flat interfaces. Holodeck renders them in spatial context — treating 3D space as the primary UI for composition, curation, and immersive web experiences.

The Thesis

AI video generators (Runway, Kling, Luma) create isolated clips, with no spatial relationship between outputs and no composition context. Holodeck places generated media on screens within a navigable 3D environment, which positions it at the intersection of Krea's generation interface and Spline's 3D web canvas.

Team

Built with a distributed team: a UI/UX designer (Figma components and interaction patterns), a 3D modeller (environment assets and character rig), and me on character design, system architecture, and implementation. The HoloDesign component library was translated from Figma to production React.

Client Validation

- Founders and initial test users praised the selected design direction

- Features exceeded expectations, over-delivering on every metric

- Help panels with instructions shaped by user onboarding feedback

- Scene variety and icon-based navigation improved from early testing

- Very satisfied client returned for pitch deck assistance

- Continued engagement led to a team invitation

The Challenge

Multi-provider video generation creates fragmented workflows. Running Runway, Kling, Luma, and image models simultaneously: each outputs to different formats, different folders, different interfaces. How do you unify parallel outputs into a coherent spatial narrative?

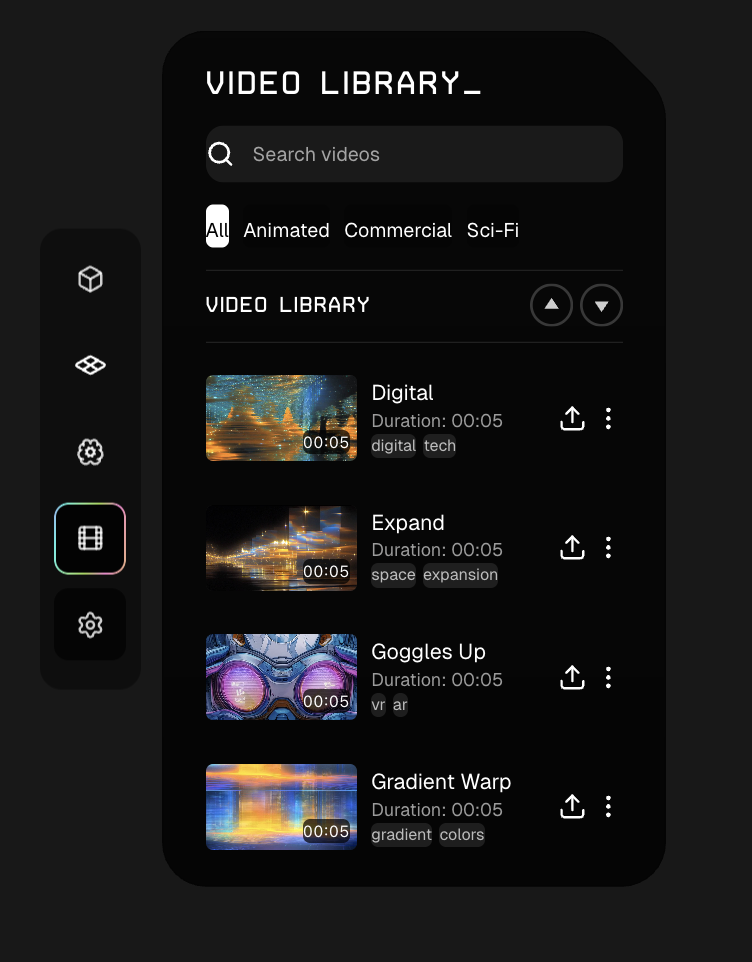

Solution: 3D environment as aggregation layer. Video outputs appear on floating screens within a navigable world. Walk between generations. Compare in spatial context. Compose scenes by positioning assets in 3D space. Same interface handles video, 3D models, and procedural skyboxes.
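To make "compare in spatial context" concrete, here is a minimal sketch of one way screens could be spread along an arc around the viewer. The names (`ScreenPlacement`, `layoutScreensOnArc`) and the default radius, arc, and height are illustrative assumptions, not taken from the Holodeck codebase.

```typescript
// Hypothetical types and names, for illustration only.
interface ScreenPlacement {
  id: string;
  position: [number, number, number]; // x, y, z in world units
  rotationY: number; // radians; turns a +Z-facing plane toward the origin
}

// Spread screens evenly along a horizontal arc centred on a viewer at the origin.
function layoutScreensOnArc(
  ids: string[],
  radius = 6,
  arcRadians = Math.PI / 2,
  height = 1.6,
): ScreenPlacement[] {
  const count = ids.length;
  return ids.map((id, index) => {
    // t runs from -0.5 to 0.5 across the arc; a single screen sits dead centre.
    const t = count === 1 ? 0 : index / (count - 1) - 0.5;
    const angle = t * arcRadians;
    const x = radius * Math.sin(angle);
    const z = -radius * Math.cos(angle); // screens sit in front of the viewer (-Z)
    const position: [number, number, number] = [x, height, z];
    // Rotating by -angle about Y points the screen normal back at the origin.
    return { id, position, rotationY: -angle };
  });
}

const placements = layoutScreensOnArc(["runway-clip", "kling-clip", "luma-clip"]);
```

Each placement could then feed the `position` and `rotation` props of a mesh in the R3F scene. Keeping the layout as a pure function makes it easy to swap the arc for a grid or a wall arrangement.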

The Category: Spatial Productivity

Same generation, different context. The environment abstracts:

- Video screens on curved and flat geometry

- 3D primitives (cubes, spheres, tori) for scene building

- GLSL skyboxes with depth parallax

- Character navigation (WASD, jump, sprint)

- Panel system for asset management

You're not managing files — you're composing in space.
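The character navigation listed above (WASD plus sprint) can be sketched as a pure function that turns key state into a ground-plane velocity. This is an assumption about how such input is typically handled, not the project's controller: the `MoveInput` shape, the speeds, and the -Z-is-forward convention are all illustrative.

```typescript
// Hypothetical input shape; in the app this would be fed by keyboard events.
interface MoveInput {
  forward: boolean; // W
  back: boolean;    // S
  left: boolean;    // A
  right: boolean;   // D
  sprint: boolean;  // e.g. Shift
}

// Convert key state into a velocity on the XZ plane (units per second).
function movementVelocity(
  input: MoveInput,
  walkSpeed = 3,
  sprintMultiplier = 1.8,
): { x: number; z: number } {
  let x = 0;
  let z = 0;
  if (input.forward) z -= 1; // forward is -Z, matching the three.js camera default
  if (input.back) z += 1;
  if (input.left) x -= 1;
  if (input.right) x += 1;

  const length = Math.sqrt(x * x + z * z);
  if (length === 0) return { x: 0, z: 0 }; // no keys, or opposing keys cancel out

  // Normalise so diagonal movement is not faster than straight movement.
  const speed = walkSpeed * (input.sprint ? sprintMultiplier : 1);
  return { x: (x / length) * speed, z: (z / length) * speed };
}
```

In a physics-driven setup, the result would typically be applied as the horizontal velocity of the character's rigid body each frame, with jumping handled separately on the Y axis.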

Design Decisions

- Space as Interface: Replaced 2D panels with navigable 3D environment. Walk between video outputs. Position screens in physical relationship to each other.

- Component Architecture: HoloDesign library, with 132 custom components including 15 complex control panels ported from Figma. Glassmorphic cards, holographic gradients, 30+ design tokens. Extends shadcn philosophy.

- Panel-to-Scene Workflow: Side panel spawns primitives, screens, models directly into 3D space. Figma designs translate to spatial components via consistent prop interfaces.

- Video Screen System: Curved geometry renders video textures. Independent playback per screen. Playlist support for queued content. HLS streaming for large files.

- Shader Pipeline: 8 custom GLSL/TSL shaders: depth skyboxes (parallax from AI depth maps), procedural nebulas, volumetric fog, alpha feathering. Mobile-optimized variants. Runtime parameter control.

- State Architecture: 6 Zustand stores with debounced saves and cloud sync. Export/import JSON configs.

- Character System: Controllable 3D Holo character navigates the space with WASD movement, jump, and sprint. Designed for spatial exploration of curated content.
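The State Architecture item above mentions debounced saves. A minimal sketch of that idea, independent of any store library, is shown here. `createDebouncedSaver`, its `schedule`/`flush` methods, and the 500 ms default are hypothetical names and values chosen for illustration.

```typescript
// Hypothetical helper: coalesces rapid state changes into a single save call.
function createDebouncedSaver<T>(save: (state: T) => void, delayMs = 500) {
  let timer: ReturnType<typeof setTimeout> | undefined;
  let pending: { state: T } | undefined;

  // Write the latest pending state immediately and cancel any waiting timer.
  const flush = (): void => {
    if (timer !== undefined) {
      clearTimeout(timer);
      timer = undefined;
    }
    if (pending !== undefined) {
      const latest = pending.state;
      pending = undefined;
      save(latest);
    }
  };

  // Remember the newest state and restart the countdown.
  const schedule = (state: T): void => {
    pending = { state };
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(flush, delayMs);
  };

  return { schedule, flush };
}

// Usage: dragging a screen fires many updates, but only the last one is saved.
const saved: string[] = [];
const saver = createDebouncedSaver<string>((json) => { saved.push(json); });
saver.schedule('{"x":1}');
saver.schedule('{"x":2}');
saver.flush(); // e.g. before the page unloads
```

With Zustand, `schedule` could be passed to a store's `subscribe` so every state change restarts the timer. The explicit `flush` covers cases such as tab close, where waiting out the delay would lose the last edit.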

System Flow

Pipeline

- AI Providers: Runway, Kling, Luma

- Panel System: Tabbed UI

- 3D Scene: R3F Canvas

- Edge Storage: Cloudflare

Design System

Built for R3F and web canvas: 100+ custom components, 15 complex control panels ported from Figma, 30+ design tokens. HoloDesign extends shadcn with glassmorphic cards, holographic gradients, and spatial primitives. Components moved from Figma to production with Claude Code; a consistent design system made this workflow possible. 20K+ lines of production code across components.

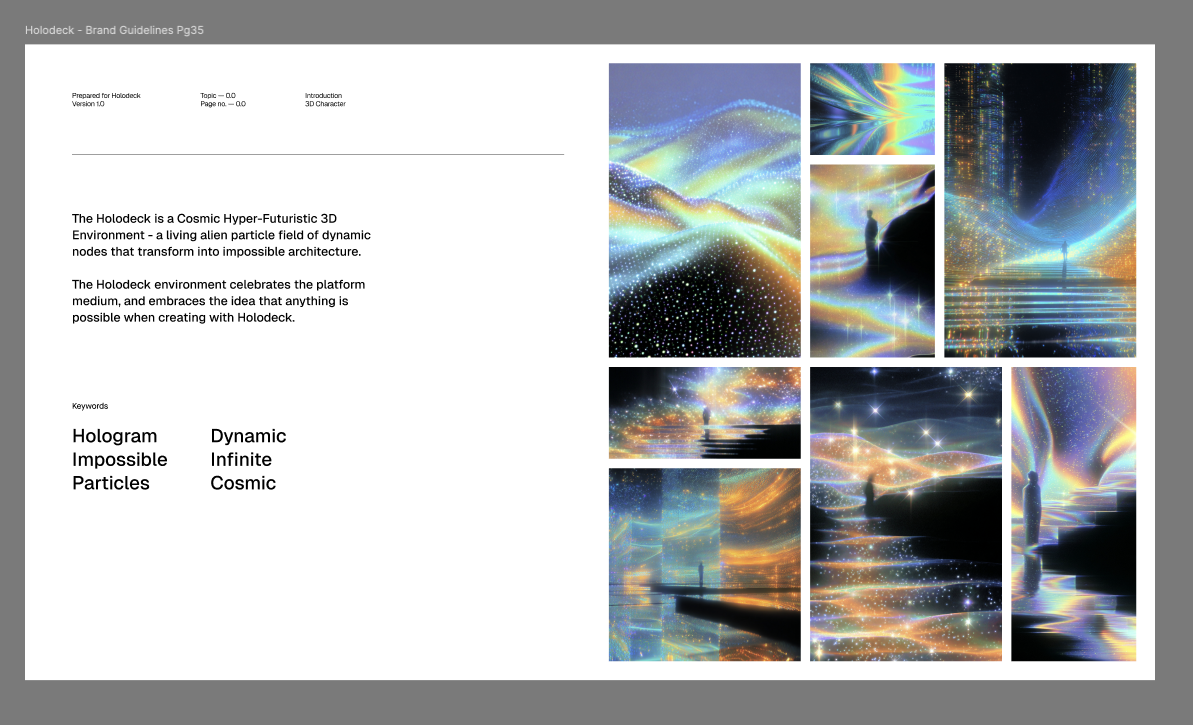

Character Design

Project's main character — a humanised agent depicted as guide and helper for navigating the space. Designed alongside branding materials as a wise maker of infinite ideas, spawning creations with a click of the fingers.

Working with the 3D modeller and UI designer, we locked in colors and brand identity and optimized the model as a game-ready asset. It is driven by a character controller in R3F with a full animation set.

System Architecture

| Layer | Responsibility | Technology |
| --- | --- | --- |
| 3D Engine | Scene rendering, physics, navigation | React Three Fiber, Rapier |
| Component System | HoloDesign UI library | React 19, Framer Motion |
| State | Scene config, 200+ actions | Zustand, localStorage persist |
| Backend | Auth, scenes, asset storage | Cloudflare Workers, D1, R2 |
| Shaders | Skybox depth, procedural effects | Custom GLSL |
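The "skybox depth" entry refers to parallax driven by a depth map. The project's shaders are not shown here, so the following is only a sketch of one common approximation (shifting texture coordinates in proportion to depth), written as plain TypeScript rather than GLSL so the arithmetic is easy to follow. The function name, the "1 = near" depth convention, and the default strength are assumptions.

```typescript
type Vec2 = [number, number];

// Shift a texture coordinate based on sampled depth and how far the view has moved.
// Assumed convention: depth is in [0, 1] with 1 = near; focusDepth stays fixed.
function parallaxUv(
  uv: Vec2,
  depth: number,
  viewOffset: Vec2,
  strength = 0.05,
  focusDepth = 0.5,
): Vec2 {
  // Pixels nearer than the focus plane move one way, farther pixels the other.
  const shift = (depth - focusDepth) * strength;
  const clamp01 = (value: number): number => Math.min(1, Math.max(0, value));
  return [
    clamp01(uv[0] + viewOffset[0] * shift),
    clamp01(uv[1] + viewOffset[1] * shift),
  ];
}
```

In a fragment shader the same expression would run per pixel, with `depth` sampled from the depth texture and `viewOffset` supplied as a uniform, for example from camera or pointer movement.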

Key Innovation

1. Video generation outputs become spatial objects

2. Place a Runway clip on the left wall, a Kling output on the right, a Luma generation overhead, and compare them in physical context

3. Walk through your generations

4. Compose by positioning

It's 3D space as creative interface — positioning AI-generated media in navigable environments for curation, composition, and immersive web experiences.
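Treating generations as spatial objects implies each one carries a position alongside its source, and the JSON export/import mentioned under State Architecture implies that arrangement can be serialized. The sketch below shows one possible shape for such a config. Every type name, field, and URL is a hypothetical placeholder rather than Holodeck's actual schema.

```typescript
type Provider = "runway" | "kling" | "luma";

// Hypothetical schema: one entry per video screen placed in the scene.
interface ScreenConfig {
  id: string;
  provider: Provider;
  videoUrl: string;
  position: [number, number, number];
}

interface SceneConfig {
  version: 1;
  screens: ScreenConfig[];
}

const PROVIDERS: Provider[] = ["runway", "kling", "luma"];

function exportScene(scene: SceneConfig): string {
  return JSON.stringify(scene, null, 2);
}

// Parse and validate untrusted JSON before it reaches the scene.
function importScene(json: string): SceneConfig {
  const data: unknown = JSON.parse(json);
  if (typeof data !== "object" || data === null) {
    throw new Error("Scene config must be an object");
  }
  const { version, screens } = data as { version?: unknown; screens?: unknown };
  if (version !== 1) throw new Error("Unsupported scene config version");
  if (!Array.isArray(screens)) throw new Error("Scene config needs a screens array");
  for (const s of screens) {
    const valid =
      typeof s === "object" && s !== null &&
      typeof s.id === "string" &&
      PROVIDERS.indexOf(s.provider) !== -1 &&
      typeof s.videoUrl === "string" &&
      Array.isArray(s.position) && s.position.length === 3;
    if (!valid) throw new Error("Invalid screen entry");
  }
  return { version: 1, screens: screens as ScreenConfig[] };
}

// Left wall, right wall, overhead (placeholder URLs).
const exampleScene: SceneConfig = {
  version: 1,
  screens: [
    { id: "left", provider: "runway", videoUrl: "https://example.com/a.mp4", position: [-4, 1.6, 0] },
    { id: "right", provider: "kling", videoUrl: "https://example.com/b.mp4", position: [4, 1.6, 0] },
    { id: "overhead", provider: "luma", videoUrl: "https://example.com/c.mp4", position: [0, 4, 0] },
  ],
};
```

A `version` field is included so that later schema changes can be detected on import instead of silently producing a broken scene.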

Stack

React Three Fiber • Three.js • Rapier Physics • React 19 • Framer Motion • Zustand • Cloudflare Workers/D1/R2 • Custom GLSL